Event-based highly dynamic obstacle avoidance

Abstract: Recent developments in the robotics community have enabled legged robots to maneuver agilely in complex environments. However, making these dynamic systems avoid fast-moving obstacles in daily living scenarios remains a challenge that limits their deployment in real-world applications. In particular, accurately detecting highly dynamic obstacles while the robot itself is moving is critical for successful avoidance, yet it is difficult because of motion blur and the high latency of conventional image sensors. Event cameras have recently shown great potential for alleviating these issues. In this paper, we propose a dynamic obstacle avoidance framework, consisting of dynamic obstacle detection and trajectory prediction algorithms, that does not rely on other sensors such as depth cameras. First, we present an accurate dynamic obstacle detection and tracking algorithm based on thresholded event data. We then use random sample consensus (RANSAC) to track the obstacle and predict its trajectory in 2D pixel coordinates. Finally, we introduce an avoidance framework that operates on the predicted 2D obstacle positions. We perform extensive real-world experiments to validate the framework, which achieves a $61.9\%$ success rate in avoiding a kicked ball. Our code and video are publicly available.
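The abstract does not spell out how the thresholded event data are formed. As a rough illustration only, the Python sketch below shows one simple way to do it under our own assumptions: events are `(x, y, t, polarity)` tuples, they are counted per pixel over a short time window, pixels below a count threshold or without enough active neighbors are discarded as noise, and the obstacle is taken as the centroid of the remaining pixels. The function names and parameters (`accumulate`, `denoise`, `detect`, `window`, `min_count`, `min_neighbors`, `min_pixels`) are hypothetical and are not taken from the released code.

```python
from collections import Counter
from typing import Iterable, Optional, Set, Tuple

Event = Tuple[int, int, float, int]  # hypothetical layout: (x [px], y [px], t [s], polarity +1/-1)
Pixel = Tuple[int, int]


def accumulate(events: Iterable[Event], t_end: float, window: float) -> Counter:
    """Count events per pixel inside the time window (t_end - window, t_end]."""
    counts: Counter = Counter()
    for x, y, t, _pol in events:
        if t_end - window < t <= t_end:
            counts[(x, y)] += 1
    return counts


def denoise(counts: Counter, min_count: int = 2, min_neighbors: int = 2) -> Set[Pixel]:
    """Threshold the count image, then drop isolated pixels (treated as background noise)."""
    active = {p for p, c in counts.items() if c >= min_count}
    kept = set()
    for x, y in active:
        # Count active pixels in the 8-neighborhood of (x, y).
        n = sum((x + dx, y + dy) in active
                for dx in (-1, 0, 1) for dy in (-1, 0, 1) if (dx, dy) != (0, 0))
        if n >= min_neighbors:
            kept.add((x, y))
    return kept


def detect(pixels: Set[Pixel], min_pixels: int = 5) -> Optional[Tuple[float, float]]:
    """Return the centroid (u, v) of the surviving pixels, or None if too few remain."""
    if len(pixels) < min_pixels:
        return None
    u = sum(p[0] for p in pixels) / len(pixels)
    v = sum(p[1] for p in pixels) / len(pixels)
    return u, v
```

The sketch assumes a single dominant moving object and makes no attempt to separate events caused by the robot's own motion; the window length and thresholds would have to be tuned to the camera and the scene.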

Summary

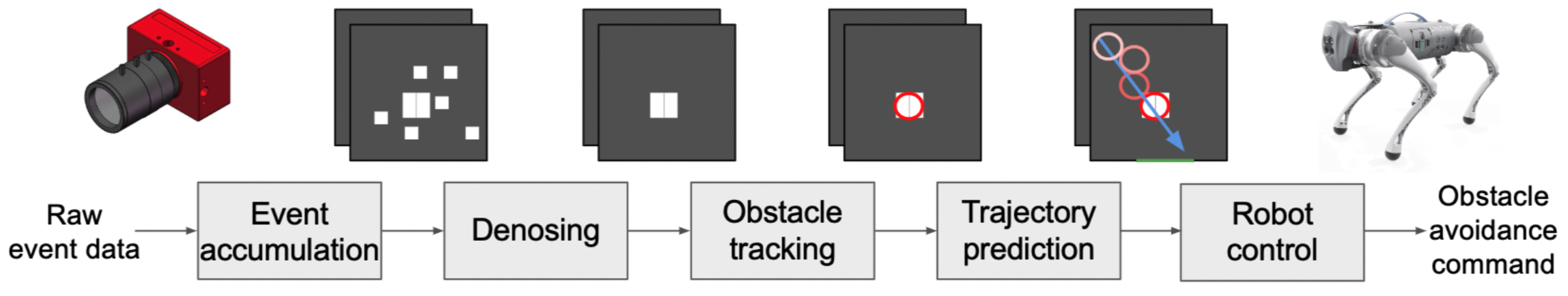

The overall pipeline of our framework is illustrated in the image below. The cascaded framework consists of five steps: event data accumulation, data denoising, obstacle detection and tracking, trajectory prediction, and robot control. We first accumulate the sparse events to support obstacle detection in the subsequent steps. We then apply an event image denoising algorithm to filter out background noise. Next, a dynamic obstacle detection algorithm segments the dynamic objects. From the sequence of detected obstacle positions, we compute the obstacle's direction of motion and predict its future trajectory. Finally, the predicted trajectory is converted into y-axis velocity commands that are sent to the robot so that it avoids the dynamic obstacle.
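To make the prediction and control steps concrete, here is a minimal Python sketch, written under our own assumptions rather than taken from the authors' implementation. It treats each detected centroid as an observation `(t, u, v)`, assumes the obstacle moves at roughly constant velocity in the image over a short horizon, fits that model with RANSAC (two-point minimal samples, then a least-squares refit on the inliers), and maps the predicted column to a sideways velocity. The helpers `ransac_track`, `predict`, and `y_velocity_command`, the inlier threshold, and the sign convention (+y to the robot's left, image column `u` growing to the right) are all hypothetical.

```python
import math
import random
from typing import List, Optional, Tuple

Obs = Tuple[float, float, float]   # hypothetical: (t [s], u [px], v [px])
Line = Tuple[float, float]         # x(t) = a + b * t


def lsq_line(ts: List[float], xs: List[float]) -> Line:
    """Ordinary least-squares fit of x = a + b*t."""
    n = len(ts)
    mt, mx = sum(ts) / n, sum(xs) / n
    var = sum((t - mt) ** 2 for t in ts)
    if var == 0.0:
        return mx, 0.0
    b = sum((t - mt) * (x - mx) for t, x in zip(ts, xs)) / var
    return mx - b * mt, b


def ransac_track(obs: List[Obs], iters: int = 100, inlier_px: float = 5.0,
                 seed: int = 0) -> Optional[Tuple[Line, Line, List[Obs]]]:
    """Fit a constant-velocity pixel trajectory with RANSAC, then refit on inliers."""
    if len(obs) < 2:
        return None
    rng = random.Random(seed)
    best: List[Obs] = []
    for _ in range(iters):
        # Minimal sample: two observations define a constant-velocity model.
        (t1, u1, v1), (t2, u2, v2) = rng.sample(obs, 2)
        if t1 == t2:
            continue
        du, dv = (u2 - u1) / (t2 - t1), (v2 - v1) / (t2 - t1)
        inliers = [o for o in obs
                   if math.hypot(u1 + du * (o[0] - t1) - o[1],
                                 v1 + dv * (o[0] - t1) - o[2]) <= inlier_px]
        if len(inliers) > len(best):
            best = inliers
    if len(best) < 2:
        return None
    ts = [o[0] for o in best]
    return lsq_line(ts, [o[1] for o in best]), lsq_line(ts, [o[2] for o in best]), best


def predict(model: Tuple[Line, Line, List[Obs]], t: float) -> Tuple[float, float]:
    """Extrapolate the fitted pixel position (u, v) to time t."""
    (au, bu), (av, bv), _ = model
    return au + bu * t, av + bv * t


def y_velocity_command(u_pred: float, image_width: int, v_max: float = 0.5) -> float:
    """Hypothetical rule: side-step away from the predicted obstacle column.

    Assumes a forward-facing camera (u grows to the right) and a body frame
    with +y pointing to the robot's left.
    """
    return -v_max if u_pred < image_width / 2 else v_max
```

For example, with ten centroids moving along u = 100 + 200t and one spurious detection, the fit ignores the outlier and predicts u ≈ 140 px at t = 0.2 s; because that column lies in the left half of a 640-pixel-wide image, the hypothetical rule commands a step to the right (negative y). A constant-velocity model is only a first approximation, and a real controller would also need to decide whether the obstacle is on a collision course at all.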

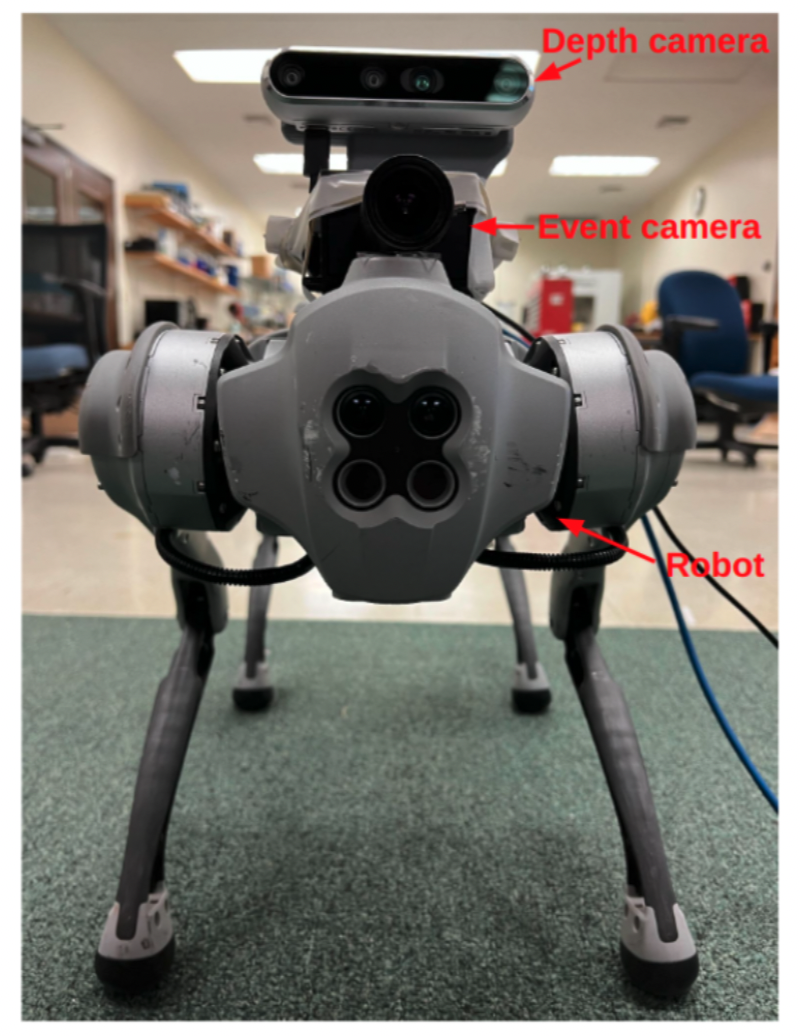

The system configuration of the Unitree Go1 robot and the event camera is shown below. The Intel RealSense Depth Camera D455 was used only to visualize the scene from the robot's viewpoint; it was not used in the obstacle avoidance framework.

Our experimental results are recorded below.