Publications

2025

- CVPRW

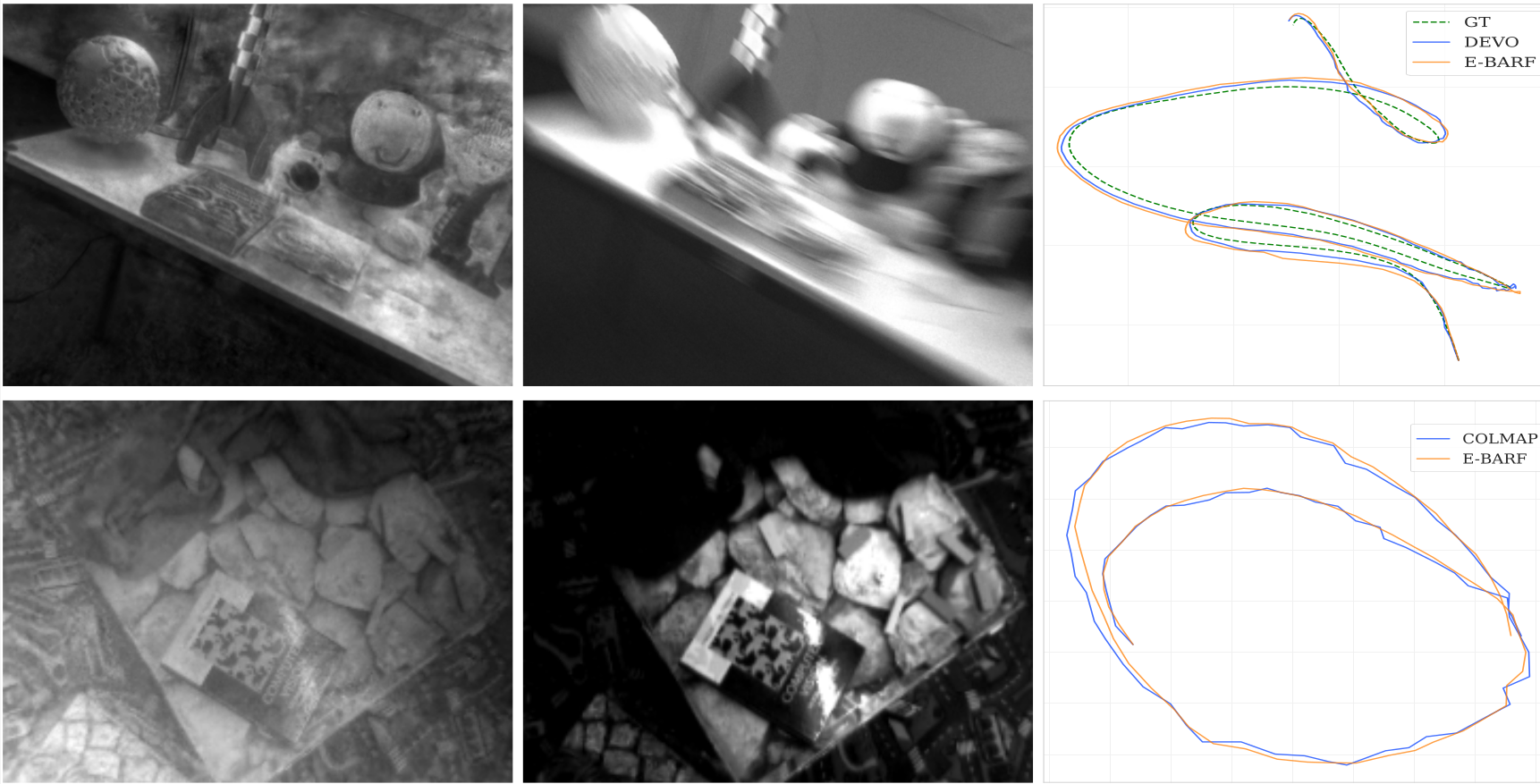

E-BARF: Bundle Adjusting Neural Radiance Fields from a Moving Event Camera
Zhipeng Tang, Shifan Zhu, Zezhou Cheng, and 2 more authors
CVPRW, 2025

Neural radiance field (NeRF) training typically requires high-quality images with precise camera poses. These images are often captured with stationary cameras under favorable lighting conditions. Such constraints limit the use of NeRF models in real-world scenarios. Event cameras, a novel class of neuromorphic vision sensors, can capture visual information with microsecond temporal resolution in challenging lighting conditions, overcoming the limitations of conventional cameras. Recent research has proposed methods to construct NeRFs from fast-moving event cameras, enabling neural radiance field modeling with handheld cameras in high-dynamic-range environments. However, current methods still rely on precise camera poses as input. To obtain this information, these approaches resort to external sensors like motion capture systems, introducing additional complexities such as sensor synchronization, calibration, and increased device costs. While existing camera pose estimation algorithms could potentially replace external sensors, they lack the accuracy needed for high-quality NeRF synthesis, resulting in blurry, low-quality novel views. To address these challenges, we propose E-BARF, a method that simultaneously trains a NeRF model and adjusts the estimated camera poses. This approach achieves high-quality NeRF reconstruction without relying on external sensors, simplifying the NeRF creation process for event cameras.
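For context on the "bundle adjusting" part of the name: the original BARF method (ICCV 2021) keeps joint pose and radiance-field optimization stable by enabling the high-frequency bands of the positional encoding gradually, coarse to fine. The Python sketch below computes that per-band weighting. The abstract does not say whether E-BARF uses exactly this schedule, so treat that as an assumption, and note that the function names are hypothetical.

```python
import math


def barf_band_weights(alpha: float, num_bands: int) -> list:
    """Coarse-to-fine weights for positional-encoding bands k = 0..num_bands-1.

    alpha is a training-progress parameter ramped from 0 to num_bands over a
    chosen number of iterations (the ramp length is a tuning choice).
    """
    weights = []
    for k in range(num_bands):
        x = alpha - k
        if x < 0:
            weights.append(0.0)  # band k not yet enabled
        elif x < 1:
            weights.append((1 - math.cos(x * math.pi)) / 2)  # smooth ramp-in
        else:
            weights.append(1.0)  # band k fully enabled
    return weights


def positional_encoding(p: float, num_bands: int, alpha: float) -> list:
    """Encode scalar p as [p, w_k*sin(2^k*pi*p), w_k*cos(2^k*pi*p), ...]."""
    enc = [p]
    for k, w in enumerate(barf_band_weights(alpha, num_bands)):
        freq = (2 ** k) * math.pi
        enc.append(w * math.sin(freq * p))
        enc.append(w * math.cos(freq * p))
    return enc


if __name__ == "__main__":
    for alpha in (0.0, 1.5, 4.0):
        print(alpha, [round(w, 3) for w in barf_band_weights(alpha, 4)])
```

Early in training, only the low-frequency bands contribute. This keeps the loss smoother with respect to camera pose while the poses are still inaccurate. The full encoding is restored once `alpha` reaches `num_bands`.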

@article{tang2025barf, title = {E-BARF: Bundle Adjusting Neural Radiance Fields from a Moving Event Camera}, author = {Tang, Zhipeng and Zhu, Shifan and Cheng, Zezhou and Kim, Donghyun and Learned-Miller, Erik}, journal = {CVPRW}, year = {2025}, }

2024

- IROS

StaccaToe: A Single-Leg Robot that Mimics the Human Leg and Toe
Nisal Perera, Shangqun Yu, Daniel Marew, and 6 more authors
IROS, 2024

We introduce StaccaToe, a human-scale, electric motor-powered single-leg robot designed to rival the agility of human locomotion through two distinctive attributes: an actuated toe and a co-actuation configuration inspired by the human leg. Leveraging the foundational design of HyperLeg’s lower leg mechanism, we develop a stand-alone robot by incorporating new link designs, custom-designed power electronics, and a refined control system. Unlike previous jumping robots that rely on either special mechanisms (e.g., springs and clutches) or hydraulic/pneumatic actuators, StaccaToe employs electric motors without energy storage mechanisms. This choice underscores our ultimate goal of developing a practical, high-performance humanoid robot capable of human-like, stable walking as well as explosive dynamic movements. In this paper, we empirically evaluate the balance capability of our toe and co-actuation mechanisms and their ability to exert explosive ground reaction forces. Through extensive hardware and controller development, StaccaToe demonstrates its control fidelity with a balanced tip-toe stance and a dynamic jump. This study is significant for three key reasons: 1) StaccaToe represents the first human-scale, electric motor-driven single-leg robot to execute dynamic maneuvers without relying on specialized mechanisms; 2) our research provides empirical evidence of the benefits of replicating critical human leg attributes in robotic design; and 3) we explain the design process for creating agile legged robots, details that have been scantily covered in the academic literature.

@article{perera2024staccatoe, title = {StaccaToe: A Single-Leg Robot that Mimics the Human Leg and Toe}, author = {Perera, Nisal and Yu, Shangqun and Marew, Daniel and Tang, Mack and Suzuki, Ken and McCormack, Aidan and Zhu, Shifan and Kim, Yong-Jae and Kim, Donghyun}, journal = {IROS}, year = {2024}, }

- RAL

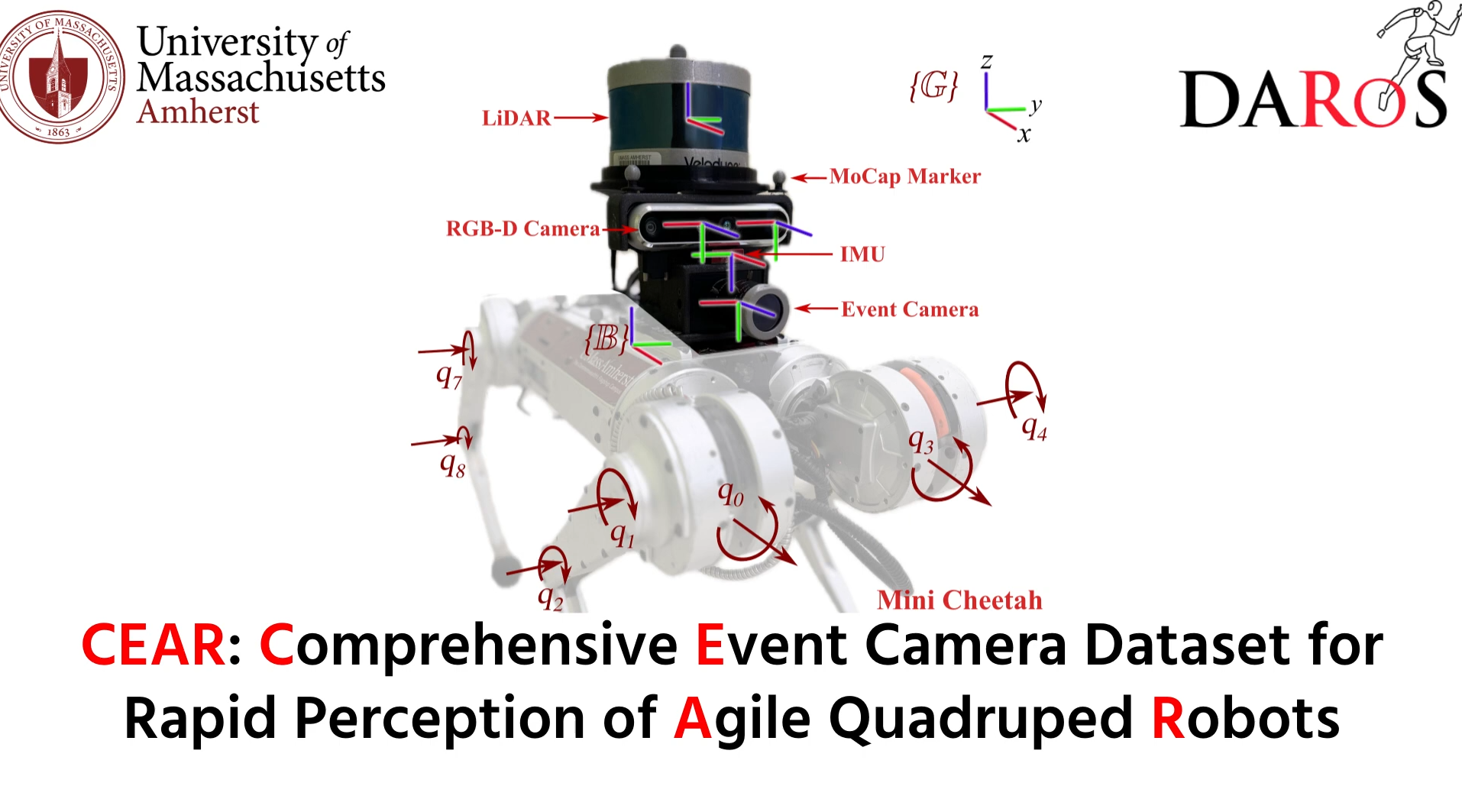

CEAR: Comprehensive Event Camera Dataset for Rapid Perception of Agile Quadruped Robots
Shifan Zhu, Zixun Xiong, and Donghyun Kim
RAL, 2024

When legged robots perform agile movements, traditional RGB cameras often produce blurred images, posing a challenge for rapid perception. Event cameras have emerged as a promising solution for rapid perception and for coping with challenging lighting conditions thanks to their low latency, high temporal resolution, and high dynamic range. However, integrating event cameras into agile legged robots is still largely unexplored. To bridge this gap, we introduce CEAR, a dataset comprising data from an event camera, an RGB-D camera, an IMU, a LiDAR, and joint encoders, all mounted on a dynamic quadruped, the Mini Cheetah robot. This comprehensive dataset features more than 100 sequences from real-world environments, encompassing various indoor and outdoor environments, different lighting conditions, a range of robot gaits (e.g., trotting, bounding, pronking), as well as acrobatic movements such as backflips. To our knowledge, this is the first event camera dataset capturing dynamic and diverse quadruped robot motions under various setups, developed to advance research in rapid perception for quadruped robots. The CEAR dataset is available at https://daroslab.github.io/cear/ .

@article{zhu2024cear, title = {CEAR: Comprehensive Event Camera Dataset for Rapid Perception of Agile Quadruped Robots}, author = {Zhu, Shifan and Xiong, Zixun and Kim, Donghyun}, journal = {RAL}, year = {2024}, }

- Ubiquitous Robots

Impedance Matching: Enabling an RL-Based Running Jump in a Quadruped Robot
Neil Guan, Shangqun Yu, Shifan Zhu, and 1 more author
Ubiquitous Robots, 2024

Replicating the remarkable athleticism seen in animals has long been a challenge in robotics control. Although reinforcement learning (RL) has demonstrated significant progress in dynamic legged locomotion control, the substantial sim-to-real gap often hinders the real-world demonstration of truly dynamic movements. We propose a new framework to mitigate this gap through frequency-domain analysis-based impedance matching between simulated and real robots. Our framework offers a structured guideline for parameter selection and for the range of dynamics randomization in simulation, thus facilitating a safe sim-to-real transfer. A policy learned with our framework achieved jumps across distances of 55 cm and heights of 38 cm. To the best of our knowledge, these are among the highest and longest running jumps demonstrated by an RL-based control policy on a real quadruped robot. Note that the achieved jumping height is approximately 85% of that obtained from a state-of-the-art trajectory optimization method, which can be seen as the physical limit of the given robot hardware. In addition, our control policy achieved stable walking at speeds of up to 2 m/s in the forward and backward directions and 1 m/s in the sideways direction.
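The abstract does not spell out the impedance model, so what follows is only a rough illustration of a frequency-domain comparison between simulated and real dynamics. The Python sketch models a single PD-controlled joint as a second-order system. It then reports the largest gap, in dB, between the magnitude responses of two parameter sets. The joint model, gains, inertias, and damping values are all hypothetical. They are not the paper's robot parameters or its matching procedure.

```python
import math


def pd_joint_response(omega: float, kp: float, kd: float,
                      inertia: float, damping: float) -> float:
    """|G(j*omega)| for a PD-controlled joint tracking a position reference.

    Assumed model: inertia*q'' + damping*q' = kp*(q_ref - q) + kd*(q_ref' - q'),
    so G(s) = (kd*s + kp) / (inertia*s^2 + (damping + kd)*s + kp).
    """
    s = 1j * omega
    return abs((kd * s + kp) / (inertia * s ** 2 + (damping + kd) * s + kp))


def response_mismatch_db(freqs_hz, sim: dict, real: dict) -> float:
    """Largest |20*log10(|G_sim| / |G_real|)| over the given frequencies (Hz)."""
    worst = 0.0
    for f in freqs_hz:
        w = 2 * math.pi * f
        ratio = pd_joint_response(w, **sim) / pd_joint_response(w, **real)
        worst = max(worst, abs(20 * math.log10(ratio)))
    return worst


if __name__ == "__main__":
    # Illustrative numbers only: identical gains, but the "real" joint has
    # extra viscous damping and a slightly larger reflected inertia.
    sim = dict(kp=30.0, kd=1.0, inertia=0.05, damping=0.0)
    real = dict(kp=30.0, kd=1.0, inertia=0.06, damping=0.3)
    print(f"{response_mismatch_db([1, 2, 5, 10, 20], sim, real):.2f} dB")
```

A gap like this could help decide which unmodeled effects (here damping and reflected inertia) need to be matched or randomized in simulation. How the paper turns its analysis into concrete parameter and randomization ranges is not reproduced here.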

@article{guan2024impedance, title = {Impedance Matching: Enabling an RL-Based Running Jump in a Quadruped Robot}, author = {Guan, Neil and Yu, Shangqun and Zhu, Shifan and Kim, Donghyun}, journal = {Ubiquitous Robots}, year = {2024}, }

- TIM

Automated extrinsic calibration of multi-cameras and lidar
Xinyu Zhang, Yijin Xiong, Qianxin Qu, and 6 more authors
IEEE Transactions on Instrumentation and Measurement, 2024

In intelligent driving systems, a multisensor fusion perception system comprising multiple cameras and LiDAR has become a crucial component. Stable extrinsic parameters among the devices in a multisensor fusion system are essential for achieving all-weather sensing with no blind zones. However, prolonged vehicle usage can result in immeasurable sensor offsets that lead to perception deviations. To this end, we study unified calibration of multiple sensors, rather than calibration between a single pair of sensors as in previous work. Benefiting from the mutual pose constraints between different sensor pairs, the method improves calibration accuracy by around 20% compared with calibrating a single pair of sensors. The study can serve as a foundation for multisensor unified calibration, enabling the automatic joint optimization of all camera and LiDAR sensors onboard a vehicle within a single framework.
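Joint calibration helps because extrinsics around any closed loop of sensors must compose to the identity. An error in one sensor pair therefore shows up as a residual that the other pairs can constrain. The Python sketch below computes that loop-consistency residual for one LiDAR and two cameras. To stay short, it is deliberately planar (SE(2)), whereas the paper deals with full 3D extrinsics. The class, the function names, and the numbers are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SE2:
    """Planar rigid transform T_a_b mapping points from frame b into frame a."""
    x: float
    y: float
    theta: float

    def compose(self, other: "SE2") -> "SE2":
        """Return self * other (apply other first, then self)."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return SE2(self.x + c * other.x - s * other.y,
                   self.y + s * other.x + c * other.y,
                   self.theta + other.theta)

    def inverse(self) -> "SE2":
        c, s = math.cos(self.theta), math.sin(self.theta)
        return SE2(-(c * self.x + s * self.y),
                   -(-s * self.x + c * self.y),
                   -self.theta)


def wrap_angle(a: float) -> float:
    """Wrap an angle to (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def loop_error(t_c1_l: SE2, t_c2_l: SE2, t_c1_c2: SE2):
    """Residual of T_c1_l^-1 * T_c1_c2 * T_c2_l, which is the identity when the
    three pairwise extrinsics agree. Returns (translation error, |rotation error|)."""
    r = t_c1_l.inverse().compose(t_c1_c2.compose(t_c2_l))
    return math.hypot(r.x, r.y), abs(wrap_angle(r.theta))


if __name__ == "__main__":
    t_c2_l = SE2(0.5, 0.1, 0.2)      # LiDAR -> camera 2 (hypothetical)
    t_c1_c2 = SE2(-1.0, 0.3, -0.4)   # camera 2 -> camera 1 (hypothetical)
    consistent = t_c1_c2.compose(t_c2_l)
    drifted = SE2(consistent.x, consistent.y, consistent.theta + 0.01)
    print(loop_error(consistent, t_c2_l, t_c1_c2))  # close to (0, 0)
    print(loop_error(drifted, t_c2_l, t_c1_c2))     # rotation error close to 0.01 rad
```

In a unified calibration, residuals like this one for every sensor loop would be minimized together with the pairwise calibration costs. The paper's actual cost terms are not reproduced here.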

@article{zhang2023automated, title = {Automated extrinsic calibration of multi-cameras and lidar}, author = {Zhang, Xinyu and Xiong, Yijin and Qu, Qianxin and Zhu, Shifan and Guo, Shichun and Jin, Dafeng and Zhang, Guoying and Ren, Haibing and Li, Jun}, journal = {IEEE Transactions on Instrumentation and Measurement}, volume = {73}, pages = {1--12}, year = {2024}, publisher = {IEEE} }

2023

- IROS

Event camera-based visual odometry for dynamic motion tracking of a legged robot using adaptive time surface
Shifan Zhu, Zhipeng Tang, Michael Yang, and 2 more authors
IROS, 2023

Our paper proposes a direct sparse visual odometry method that combines event and RGBD data to estimate the pose of agile legged robots during dynamic locomotion and acrobatic behaviors. Event cameras offer high temporal resolution and dynamic range, which can eliminate the issue of blurred RGB images during fast movements. This unique strength holds potential for accurate pose estimation of agile legged robots, which has been a challenging problem to tackle. Our framework leverages the benefits of both RGBD and event cameras to achieve robust and accurate pose estimation, even during dynamic maneuvers such as the jumping and landing of a quadruped robot, the Mini-Cheetah. Our major contributions are threefold. First, we introduce an adaptive time surface (ATS) method that addresses the whiteout and blackout issues of conventional time surfaces by formulating pixel-wise decay rates based on scene complexity and motion speed. Second, we develop an effective pixel selection method that samples directly from event data and filters the samples through ATS, enabling us to pick pixels on distinct features. Lastly, we propose a nonlinear pose optimization formulation that simultaneously performs 3D-2D alignment on both RGB-based and event-based maps and images, allowing the algorithm to fully exploit the benefits of both data streams. We extensively evaluate the performance of our framework on both a public dataset and our own quadruped robot dataset, demonstrating its effectiveness in accurately estimating the pose of agile robots during dynamic movements. Supplemental video: https://youtu.be/-5ieQShOg3M
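A conventional exponential time surface maps each pixel to exp(-(t - t_last)/τ) using one global decay constant τ. Roughly speaking, a τ that is too short lets slow regions fade to black, while a τ that is too long leaves busy regions saturated. The Python sketch below makes τ per-pixel and shortens it where neighboring event activity is high. This activity-based rule is a hypothetical stand-in for illustration only. It is not the paper's ATS formulation, which derives decay rates from scene complexity and motion speed.

```python
import math


def adaptive_time_surface(events, width, height, t_now, tau_base=0.05, radius=1):
    """Time surface exp(-(t_now - t_last) / tau) with a per-pixel tau.

    events: iterable of (t, x, y) with t in seconds (e.g. a recent window).
    Hypothetical rule: tau = tau_base / (1 + n), where n counts events in the
    (2*radius+1) x (2*radius+1) neighbourhood, so busy regions decay faster.
    """
    last_t = [[None] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for t, x, y in events:
        if last_t[y][x] is None or t > last_t[y][x]:
            last_t[y][x] = t  # keep the most recent timestamp per pixel
        count[y][x] += 1

    surface = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if last_t[y][x] is None:
                continue  # pixel never fired: stays at 0
            n = sum(count[yy][xx]
                    for yy in range(max(0, y - radius), min(height, y + radius + 1))
                    for xx in range(max(0, x - radius), min(width, x + radius + 1)))
            tau = tau_base / (1 + n)
            surface[y][x] = math.exp(-(t_now - last_t[y][x]) / tau)
    return surface


if __name__ == "__main__":
    # Same firing time, different neighbourhood activity (1-pixel-high strip).
    evs = [(0.5, 0, 0), (0.5, 2, 0), (0.99, 1, 0), (0.99, 5, 0)]
    row = adaptive_time_surface(evs, width=7, height=1, t_now=1.0)[0]
    print(round(row[1], 3), round(row[5], 3))  # the busier pixel decays more
```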

@article{zhu2021event, title = {Event camera-based visual odometry for dynamic motion tracking of a legged robot using adaptive time surface}, author = {Zhu, Shifan and Tang, Zhipeng and Yang, Michael and Learned-Miller, Erik and Kim, Donghyun}, journal = {IROS}, year = {2023}, }

- IROS

Dynamic Object Avoidance using Event-Data for a Quadruped Robot
Shifan Zhu, Nisal Perera, Shangqun Yu, and 2 more authors
IROS IPPC Workshop, 2023

As robots increase in agility and encounter fast-moving objects, dynamic object detection and avoidance become notably challenging. Traditional RGB cameras, burdened by motion blur and high latency, often act as the bottleneck. Event cameras have recently emerged as a promising solution for the challenges related to rapid movement. In this paper, we introduce a dynamic object avoidance framework that integrates both event and RGBD cameras. Specifically, the framework first estimates and compensates for the motion of the events to detect dynamic objects. Depth data is then incorporated to derive a 3D trajectory. Starting from a static state, the robot adjusts its height based on the predicted collision point to avoid the dynamic obstacle. In real-world experiments with the Mini-Cheetah, our approach successfully avoids dynamic objects moving at speeds of up to 5 m/s, achieving an 83% success rate. Supplemental video:
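The last two steps (turning 3D object positions into a predicted collision point, then choosing a body-height response) can be sketched in Python as follows. The sketch makes several assumptions:

- the object's 3D positions have already been recovered from the event and depth data;
- the object follows a ballistic motion model, fitted by least squares;
- the frame is robot-centered, with the robot occupying the plane x = 0;
- a hypothetical crouch/rise rule with made-up thresholds picks the response.

The paper's trajectory model and height policy may differ.

```python
G = 9.81  # gravitational acceleration, m/s^2


def fit_line(ts, vals):
    """Least-squares fit vals ~ a + b*t (needs at least two distinct times)."""
    n = len(ts)
    mt = sum(ts) / n
    mv = sum(vals) / n
    stt = sum((t - mt) ** 2 for t in ts)
    stv = sum((t - mt) * (v - mv) for t, v in zip(ts, vals))
    b = stv / stt
    return mv - b * mt, b


def predict_crossing(ts, xs, ys, zs, plane_x=0.0):
    """Predict (t, y, z) where a ballistic object crosses the plane x = plane_x.

    Returns None if the crossing does not lie in the future.
    """
    x0, vx = fit_line(ts, xs)
    y0, vy = fit_line(ts, ys)
    # Add 0.5*G*t^2 to cancel the known gravity term, so z is linear in t.
    z0, vz = fit_line(ts, [z + 0.5 * G * t * t for t, z in zip(ts, zs)])
    if vx == 0:
        return None
    t_hit = (plane_x - x0) / vx
    if t_hit <= max(ts):
        return None  # moving away, or already past the plane
    return t_hit, y0 + vy * t_hit, z0 + vz * t_hit - 0.5 * G * t_hit ** 2


def choose_height_action(crossing, half_width=0.2, body_center_z=0.25,
                         max_threat_z=0.6):
    """Hypothetical rule: crouch under high threats, rise over low ones."""
    if crossing is None:
        return "stay"
    _, y, z = crossing
    if abs(y) > half_width or z > max_threat_z or z < 0.0:
        return "stay"  # predicted to miss the body (or hit the ground first)
    return "crouch" if z >= body_center_z else "rise"


if __name__ == "__main__":
    ts = [0.0, 0.05, 0.1, 0.15, 0.2]
    xs = [2.0 - 5.0 * t for t in ts]  # approaching at 5 m/s
    ys = [0.05 for _ in ts]
    zs = [0.3 + 2.0 * t - 0.5 * G * t * t for t in ts]
    hit = predict_crossing(ts, xs, ys, zs)
    print(hit, choose_height_action(hit))
```

Subtracting the known gravity term keeps every axis a linear least-squares problem. A full pipeline would also have to account for the robot's reaction time before the predicted crossing.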

@article{zhu2023dynamic, title = {Dynamic Object Avoidance using Event-Data for a Quadruped Robot}, author = {Zhu, Shifan and Perera, Nisal and Yu, Shangqun and Hwang, Hochul and Kim, Donghyun}, journal = {IROS IPPC Workshop}, year = {2023}, }

2021

- ICRA

Lifelong Localization in Semi-Dynamic Environment
Shifan Zhu, Xinyu Zhang, Shichun Guo, and 2 more authors
ICRA, 2021

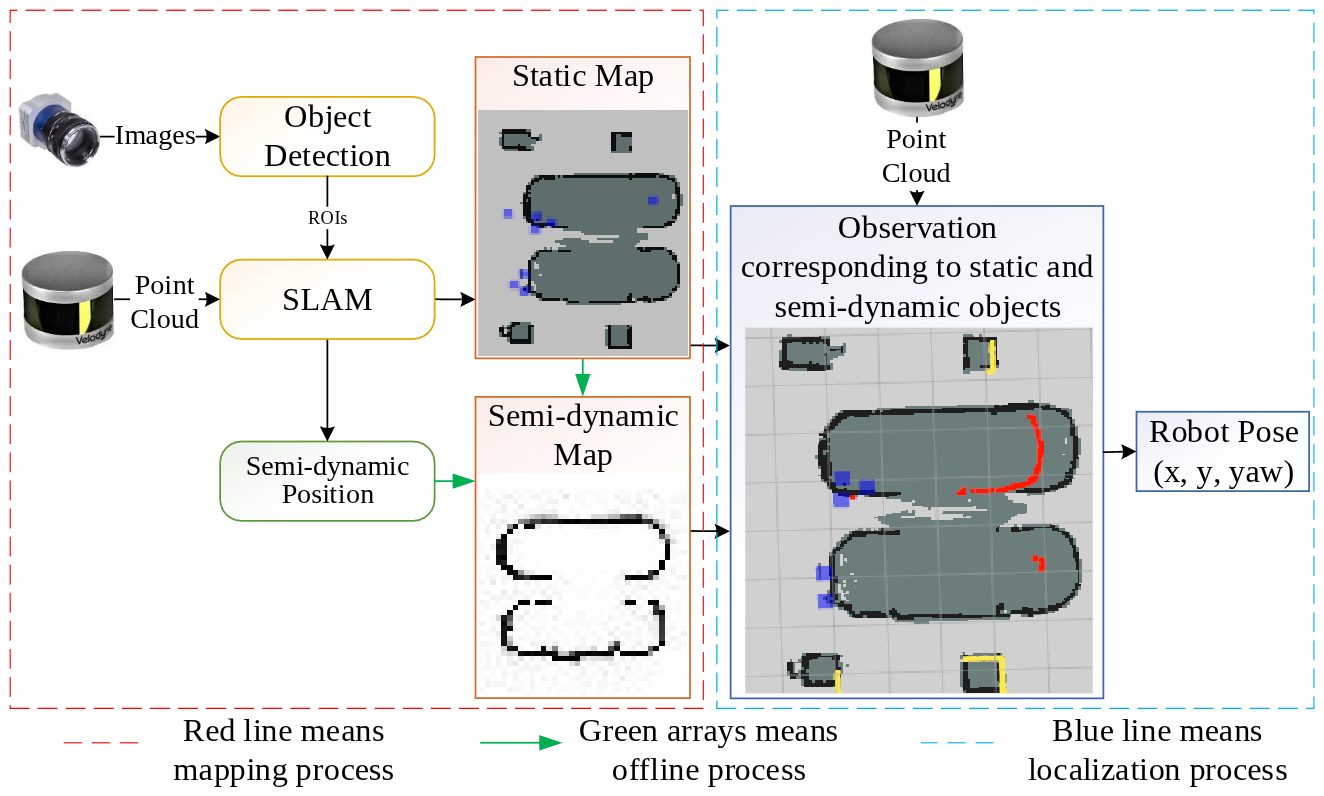

Mapping and localization in non-static environments are fundamental problems in robotics. Most previous methods focus mainly on static and highly dynamic objects in the environment; because they do not consider objects with lower dynamics, such as parked cars and stopped pedestrians, they may suffer localization failures in semi-dynamic scenarios. In this paper, we introduce semantic mapping and lifelong localization approaches to recognize semi-dynamic objects in non-static environments. We also propose a generic framework that can integrate mainstream object detection algorithms with mapping and localization algorithms. The mapping method combines an object detection algorithm and a SLAM algorithm to detect semi-dynamic objects and constructs a semantic map that contains only the semi-dynamic objects in the environment. During navigation, the localization method classifies observations as corresponding to static or non-static objects and evaluates whether those semi-dynamic objects have moved, so as to down-weight invalid observations and reduce localization fluctuation. Real-world experiments show that the proposed method can improve the localization accuracy of mobile robots in non-static scenarios.
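The reweighting step during localization can be illustrated with a short Python sketch, which assigns one weight per observation:

- observations of static structure keep full weight;
- observations associated with a mapped semi-dynamic object are down-weighted;
- those observations are discarded entirely once the object appears to have moved from its mapped position.

The data classes, the displacement threshold, and the weights are hypothetical choices. They are not the paper's values or its exact test.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class SemiDynamicObject:
    """A semantic-map entry (e.g. a parked car) at its mapped position."""
    label: str
    x: float
    y: float


@dataclass
class Observation:
    """A landmark observation expressed in the map frame.

    matched is None for static structure; otherwise it is the semi-dynamic map
    object the observation was associated with (association is assumed given).
    """
    x: float
    y: float
    matched: Optional[SemiDynamicObject] = None


def observation_weight(obs: Observation, move_threshold: float = 0.5,
                       semi_dynamic_weight: float = 0.5) -> float:
    """Hypothetical weighting rule for a localization update."""
    if obs.matched is None:
        return 1.0  # static structure: full weight
    moved = math.hypot(obs.x - obs.matched.x, obs.y - obs.matched.y)
    if moved > move_threshold:
        return 0.0  # object has moved since mapping: observation is invalid
    return semi_dynamic_weight  # still in place, but could move at any time


if __name__ == "__main__":
    car = SemiDynamicObject("parked_car", 10.0, 2.0)
    observations = [
        Observation(0.0, 5.0),          # wall
        Observation(10.1, 2.05, car),   # car still where it was mapped
        Observation(14.0, 2.0, car),    # car has been moved
    ]
    print([observation_weight(o) for o in observations])
```

In a particle filter or scan-matching back end, these weights would scale each observation's contribution to the likelihood or residual.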

@article{zhu2021life, author = {Zhu, Shifan and Zhang, Xinyu and Guo, Shichun and Li, Jun and Liu, Huaping}, title = {Lifelong Localization in Semi-Dynamic Environment}, journal = {ICRA}, year = {2021}, }

- ICRA

Line-based Automatic Extrinsic Calibration of LiDAR and Camera
Xinyu Zhang, Shifan Zhu, Shichun Guo, and 2 more authors
ICRA, 2021

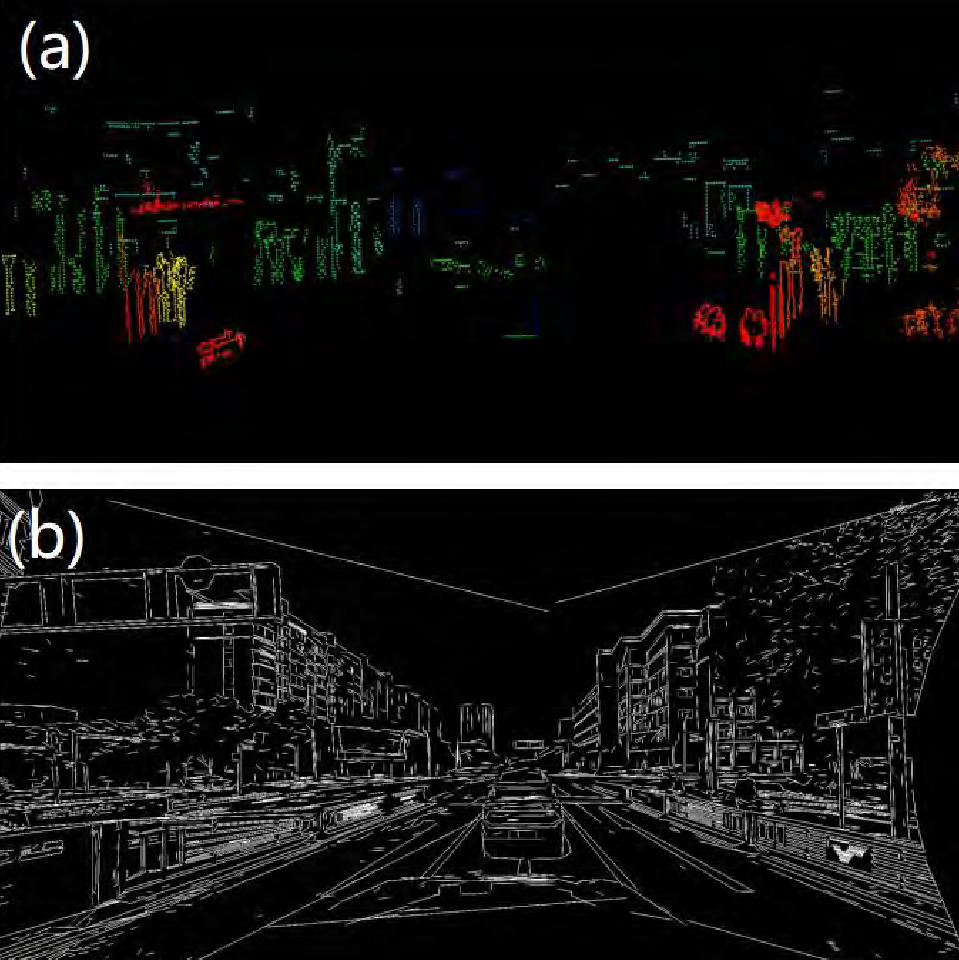

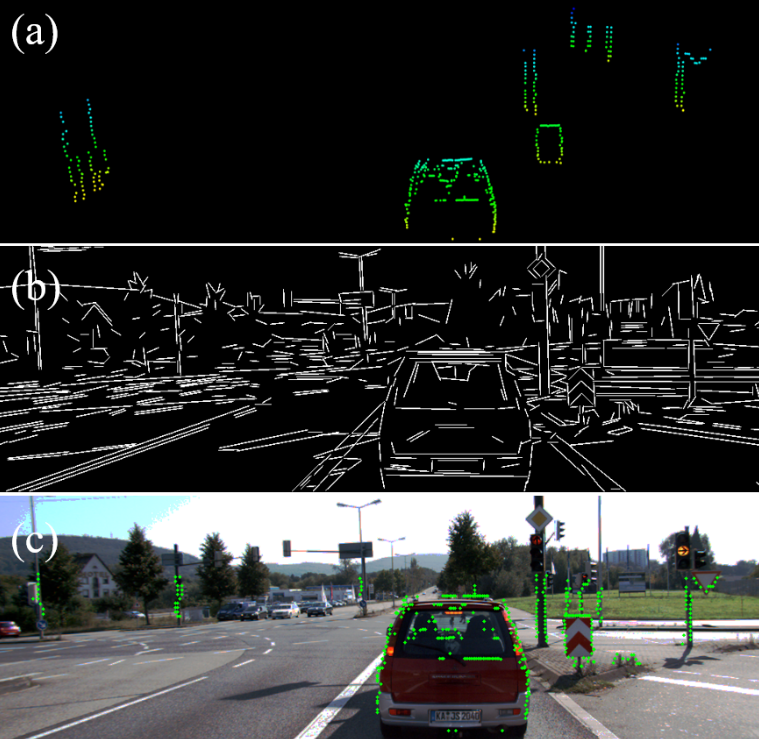

Reliable real-time extrinsic parameters between a 3D Light Detection and Ranging (LiDAR) sensor and a camera are a key component of multi-modal perception systems. However, the extrinsic transformation may drift gradually during operation, which can decrease the accuracy of the perception system. To solve this problem, we propose a line-based method that enables automatic online extrinsic calibration of LiDAR and camera in real-world scenes. Line features are chosen to constrain the extrinsic parameters because of their ubiquity. First, line features are extracted and filtered from point clouds and images. Then, an adaptive optimization is used to provide accurate extrinsic parameters. We demonstrate that line features are robust geometric features that can be extracted from both point clouds and images, and thus contribute to the extrinsic calibration. To demonstrate the benefits of this method, we evaluate it on the KITTI benchmark with ground-truth values. The experiments verify the accuracy of the calibration approach. In online experiments on hundreds of frames, our approach automatically corrects miscalibration errors and achieves an accuracy of 0.2 degrees, which verifies its applicability in various scenarios. This work can provide a basis for perception systems and further improve the performance of other algorithms that use these sensors.
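The scoring idea can be sketched in Python in three steps:

1. Project LiDAR points that lie on a line into the image with a candidate extrinsic.
2. Count how many of them land on image line pixels.
3. Keep the best-scoring candidate.

The sketch assumes a pinhole camera with known intrinsics and a LiDAR frame that is already axis-aligned with the camera apart from a small yaw error. A set of edge pixels stands in for the extracted image lines, and a brute-force yaw search replaces the paper's adaptive optimization. All names and numbers are hypothetical.

```python
import math


def project(point, yaw, t, fx, fy, cx, cy):
    """Pinhole projection of a LiDAR point after a yaw-only extrinsic.

    The rotation is about the camera y axis (a simplification: a real extrinsic
    has three rotational and three translational parameters).
    """
    X, Y, Z = point
    c, s = math.cos(yaw), math.sin(yaw)
    xc = c * X + s * Z + t[0]
    yc = Y + t[1]
    zc = -s * X + c * Z + t[2]
    if zc <= 0:
        return None  # point is behind the camera
    return round(fx * xc / zc + cx), round(fy * yc / zc + cy)


def alignment_score(line_points, edge_pixels, yaw, t, intrinsics):
    """Count projected LiDAR line points that land on image line pixels."""
    hits = 0
    for p in line_points:
        uv = project(p, yaw, t, *intrinsics)
        if uv is not None and uv in edge_pixels:
            hits += 1
    return hits


def refine_yaw(line_points, edge_pixels, yaw0, t, intrinsics, step=0.002, span=10):
    """Brute-force search of yaw in [yaw0 - span*step, yaw0 + span*step]."""
    candidates = [yaw0 + k * step for k in range(-span, span + 1)]
    return max(candidates,
               key=lambda yaw: alignment_score(line_points, edge_pixels, yaw, t, intrinsics))


if __name__ == "__main__":
    intr = (500.0, 500.0, 320.0, 240.0)  # hypothetical fx, fy, cx, cy
    pole = [(0.5, -1.0 + 0.1 * i, 5.0) for i in range(21)]  # a vertical LiDAR line
    true_yaw, t = 0.01, (0.0, 0.0, 0.0)
    # Stand-in for an extracted image line: where the pole projects under the true extrinsic.
    edges = {project(p, true_yaw, t, *intr) for p in pole}
    print(refine_yaw(pole, edges, 0.0, t, intr))  # recovers a yaw near 0.01
```

A practical version would typically score against a distance transform of the edge image, so that near misses still contribute, and would optimize all six extrinsic parameters.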

@article{zhang2021line, title = {Line-based Automatic Extrinsic Calibration of LiDAR and Camera}, author = {Zhang, Xinyu and Zhu, Shifan and Guo, Shichun and Li, Jun and Liu, Huaping}, journal = {ICRA}, year = {2021}, }